Targetless Stereo Camera Calibration

In any system with multiple sensors, unless the sensors are calibrated, the output from the sensors cannot be combined or fused to produce more meaningful data. Cameras are no exception. For example, the portrait mode photography in our smartphone cameras using multiple cameras. Just like our eyes look at the same object from two different angles (two of our eyes), two cameras rigidly mounted can look at an object and estimate how far away the object is from the camera. This is called stereo vision. This application of estimating the depth is only possible if the two cameras are calibrated.

Stereo calibration gives you the extrinsics, i.e. transformation matrices between cameras. Once you get the extrinsic, you can geometrically calculate the 3D coordinates of the points in the scene. To get the extrinsics, we need to find the image coordinates of the same object in the two camera images. This is called stereo matching. These matched coordinates are then used to calculate the extrinsics using epipolar geometry. This is an excellent video by Prof. Cyrill Stachniss.

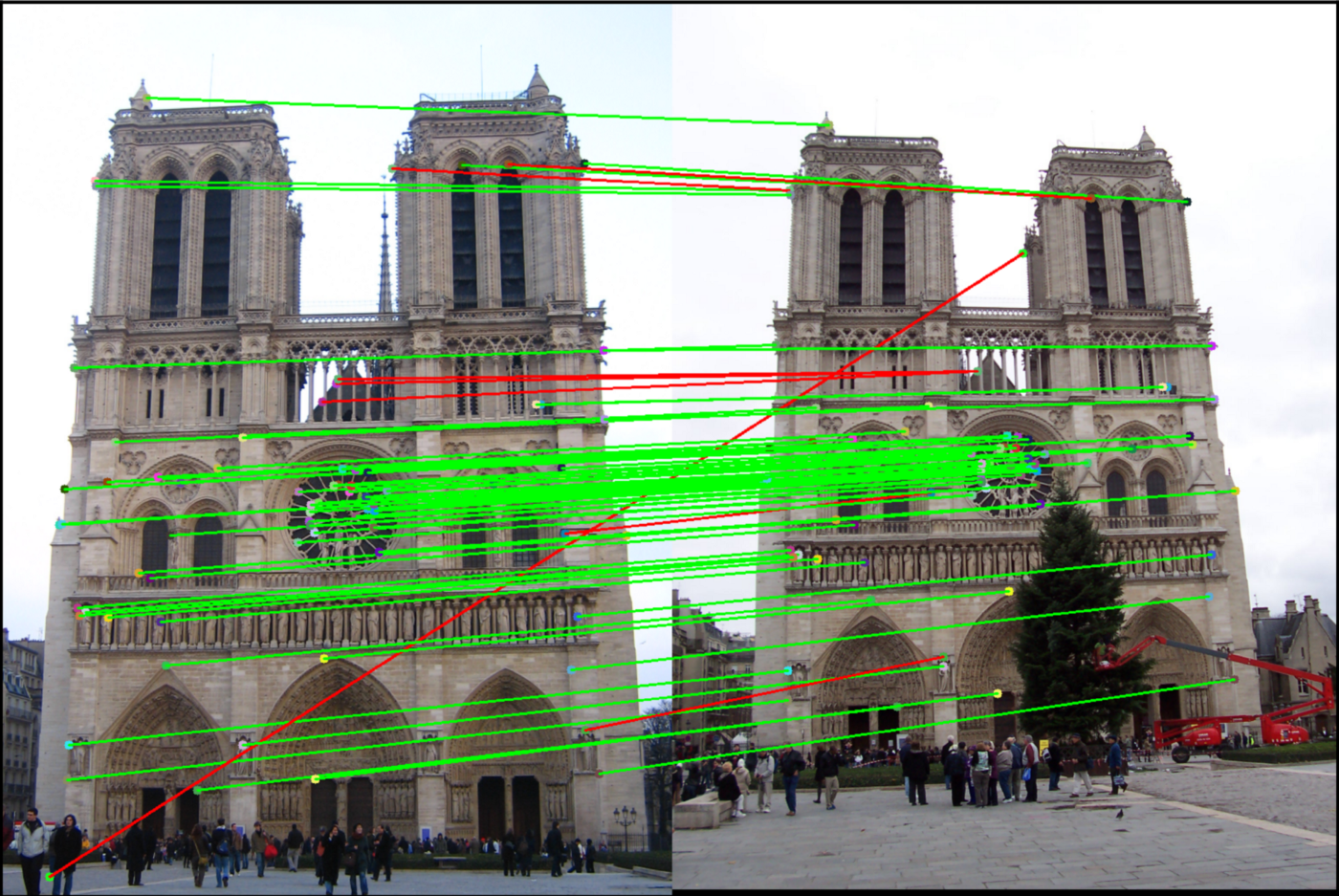

Stereo matching can be done using targets like chessboards, charuco boards etc. Since the dimensions and properties of the targets are known, this information can be used to calibrate the cameras.  However, based on the camera installation and the scene, using a target is cumbersome and sometimes even dangerous. Instead, if we can use natural scenes like a city road, parking garage or even the insides of an ordinary room, it'd be highly useful. We can extract feature points from these natural scenes and match the similar to get the stereo matching across different cameras. Once the matching is computed, the extrinsics can be calculated using epipolar geometry, much like the target based calibration.

However, based on the camera installation and the scene, using a target is cumbersome and sometimes even dangerous. Instead, if we can use natural scenes like a city road, parking garage or even the insides of an ordinary room, it'd be highly useful. We can extract feature points from these natural scenes and match the similar to get the stereo matching across different cameras. Once the matching is computed, the extrinsics can be calculated using epipolar geometry, much like the target based calibration.

The challenge is extracting and matching the feature points accurately from natural scenes. Since target-based methods have pre-determined properties, algorithms can be fine-tuned and optimized to achieve the matching. However targetless methods have to deal with a wide range of natural scenes and hence the algorithms have to be robust enough. The OpenCV library has a few feature extractors and matchers that are widely used.  With the advent of neural network models, there are now neural network models that do the same feature extraction and matching. These new neural models perform far better if trained of good datasets.

With the advent of neural network models, there are now neural network models that do the same feature extraction and matching. These new neural models perform far better if trained of good datasets.

The extrinsics of a setup can be split into two parts: a rotation matrix and a translation vector. An issue with the targetless approach is that since there is no mapping from the real-world dimension to image space (unlike target, where dimensions are known in real-world space), the distance between the two cameras (translation vector) cannot be scaled. To solve this we can manually measure the distance between the cameras and use that value to scale the translation vector. But this comes with its own problems such as measurement errors as the translation vector is the vector between the camera focus points. Since they are imaginary points, a simple scale measure between the physical camera sensors will have an error. However, if the error threshold of the your application is not in the millimeters, then the translation vector can be scaled this way.

Nevertheless, I am still exploring what can be done to solve this scaling issue. A naive approach is to have a pre-determined set of anchor-points that would be extraced by the targetless feature extractors. Then the distance between the anchor points in the real world can be used to scale the baseline distance between the cameras. However, this does not make the flow fully targetless.